To begin this inquiry into the imaginaries of public algorithms, Design Friction hosted a workshop in November 2020 that gathered people with different profiles and expertise from public services – decision-makers, algorithm coders, and users – as well as researchers from academia. The workshop served as a ‘temperature check’ of the imaginaries inhabiting the participants’ minds.

Draw me an algorithm

The workshop was structured in two parts. The first part aimed to gather each participant’s definition of an algorithm, so as to draw the outline of a shared definition of the term.

The definition given by each of the eight participants was indicative of their area of expertise. The technical profiles suggested scientific definitions of an algorithm: a ‘calculation process’, a ‘series of logical and systematic operations’, a ‘composition of operating modes’, or a ‘series of instructions’. Other profiles, however, took a wider angle, mentioning how algorithms are embodied and implemented in our world. Hence the term ‘tool’ appeared, ‘not only technical’, but also ‘powerful’, and in need of a ‘better regulation of its design and use’.

Sharing these definitions led to discussions and debates about the role of algorithms in public services, and shone a light on their benefits and drawbacks. Though the topic was not narrowed down to machine learning, the discussion quickly landed on this type of algorithm. Because of their capacity for quick and massive data analysis, algorithms were considered the one and only viable way to add value to the legacy of data built up by administrations and to make it exploitable. Moreover, in all cases – even when algorithms take the form of mere Excel sheets – they were considered a stopgap for the lack of human resources in the public sector, freeing up precious time for employees, who can then be reassigned to work with more added value.

Aware as they were of these benefits, the participants were also critical of algorithms. They mentioned their ‘black box’ aura and highlighted three points:

- A lack of transparency, stemming from limited visibility into the processed data and the technical aspects of the algorithms, as well as into how legal measures and regulations are interpreted in the computer code.

- As the public sector must be accountable for its decision process, if decisions are made, in whole or in part, by an algorithm, the inability to explain them puts the legitimacy of deploying this technology in jeopardy.

- The deployment of algorithms too often entrenches existing processes without questioning them or proposing an alternative. Because of their rigidity, algorithms inhibit adjustments arising from decisions made by the public.

Furthermore, the conversations revealed a fear of technological replacement, a sign of people’s apprehension that algorithms will devalue the knowledge and expertise of many fields of work.

Algorithms uchronia:

What if we rethought an algorithm?

During the second part of the workshop, the participants were asked to reenact the design phase of an algorithm that caused a great deal of controversy in the UK in 2020: the A-level algorithm designed to assign high schoolers their exam grades.

DECONSTRUCT THE ALGORITHM…

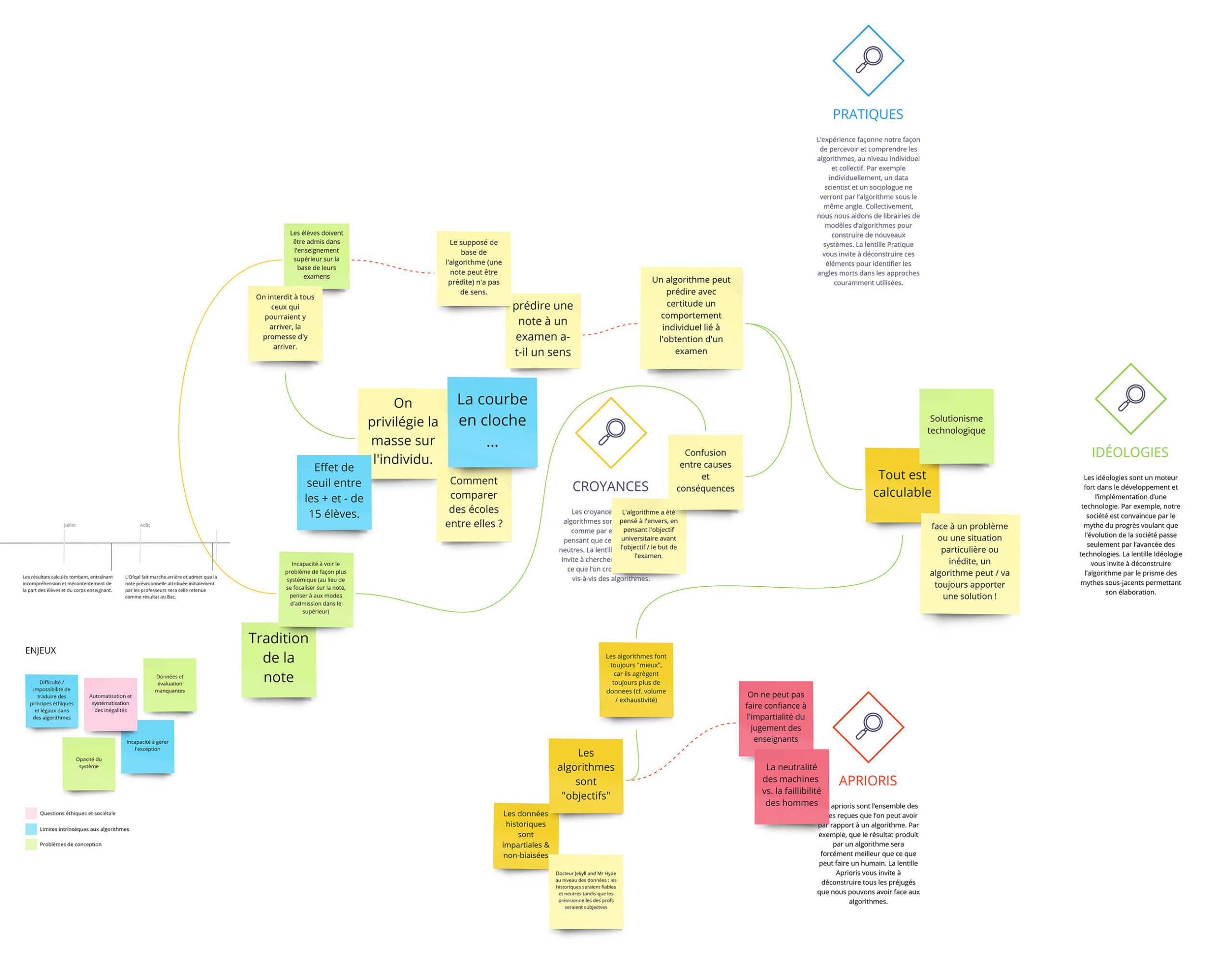

Before getting into the reconstruction of an algorithm, the participants were invited to scrutinise the one that had actually been set up, and to analyse the reasons for its failure. They ended up identifying several strong imaginaries, biases and beliefs that surround algorithms during their design phase, including:

- Thinking that all problems must be solved by technology (the concept of technological solutionism).

- Attributing an algorithm’s failure to a lack of input data, which becomes a justification for always collecting more data to compensate for the gaps and faults of the algorithm.

- Believing an algorithm and its results are objective, neutral and impartial, because they rest on mathematical principles (calculations) and on data that describe reality and truth (the concept of digital positivism).

- Considering that algorithms are better than humans, illustrated here by the belief that high schoolers will get fairer and less biased grades from the algorithm than from their teachers.

- Believing that everything can be calculated and thus considering that one’s exam results can be predicted.

… TO BUILD AN ALTERNATIVE PROCEDURE

The participants put themselves in the shoes of the team tasked with finding a solution for assessing UK high schoolers during Covid-19. Each person had a specific role and had to make decisions in a limited time. The diagram below shows the chronology of the arguments that appeared over the course of the participants’ discussions.

After dismissing an algorithm-free solution – a remote exam – for reasons of technological complexity and a potential leapfrogging effect, they chose the path of continuous assessment, using a weighted average of the grades a student obtained throughout the year to establish the exam result. To make this approach fairer in light of scoring differences among institutions, this result would be adjusted upwards by a bonus added to the average. To ensure scoring equity, a human-driven step would be added: a committee that would check for consistency across all grades. The participants also wished to offer a second chance, in the form of an oral exam, to students at risk of failing their A-levels.
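To make the arithmetic of this alternative procedure concrete, here is a minimal sketch of it. Everything numeric in it is an assumption made for illustration: the workshop did not specify a grading scale, assessment weights, a bonus value or a pass mark, and names such as `Grade`, `provisional_result` and `needs_oral_exam` are hypothetical. The committee review stays outside the code on purpose, since the participants framed it as a human step.

```python
from dataclasses import dataclass


@dataclass
class Grade:
    score: float   # mark for one assessment (hypothetical 0-100 scale)
    weight: float  # relative importance of that assessment (hypothetical)


def weighted_average(grades: list[Grade]) -> float:
    """Weighted average of the grades obtained throughout the year."""
    total_weight = sum(g.weight for g in grades)
    if total_weight <= 0:
        raise ValueError("grades must carry a positive total weight")
    return sum(g.score * g.weight for g in grades) / total_weight


def provisional_result(grades: list[Grade], bonus: float = 0.0,
                       max_score: float = 100.0) -> float:
    """Average plus a positive bonus, capped at the top of the scale.

    The bonus stands in for the adjustment meant to offset scoring
    differences among institutions; its value here is an assumption.
    The result is provisional: a human committee would still review it.
    """
    return min(weighted_average(grades) + bonus, max_score)


def needs_oral_exam(result: float, pass_mark: float = 40.0) -> bool:
    """Flag students who would be offered the second-chance oral exam.

    The pass mark of 40 is an assumption, not a figure from the workshop.
    """
    return result < pass_mark


# Usage: two assessments, the second counting three times as much.
year = [Grade(score=50, weight=1), Grade(score=70, weight=3)]
result = provisional_result(year, bonus=5)
print(result, needs_oral_exam(result))
```

Even in this toy form, the weights, the size of the bonus and the pass mark are all choices someone has to make and justify, which is where the tension points discussed in this section come in.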

Beyond the result of this algorithm reconstruction, the discussions shed light on several tension points that may arise in actual, ‘real’ projects.

First, most project teams in charge of developing such systems find themselves executing decisions taken by their hierarchy, with little to no room for manoeuvre to question an algorithm’s implementation or to negotiate its modalities. This often comes together with a lack of diversity within the teams, making it difficult to take a step back and look critically at these projects.

The second point is the ‘chaos dilemma’. The problems encountered by public services and their workers are taken into account late in the game, so the answer must come immediately. With their backs against the wall, public servants are often forced to accept algorithmic systems as the only viable option for dealing with problematic situations quickly and efficiently.

Last but not least, this emergency approach plays out in a project timeframe that is too short and rushed to take into account, from the design stage, all or even part of the bias and ethical issues that may result from the algorithm’s implementation. Facing the emergency, teams focus only on the technical challenge of the algorithm and on launching a functional tool. They do not think about, or do not have the time for, anchoring their approach in a broader consultation process – involving future users through co-design, or gathering the opinions of the people who will be subject to the system.

Going further in exploring the imaginaries related to public algorithms

This workshop was the first step in this exploratory work and was complemented by a survey in January 2021. The survey gathered broader feedback on the imaginaries identified during the workshop.

All these thoughts and imaginaries fed into a work of design fiction: six scenarios that explore the future of public algorithms.